In modern digital marketing, businesses rely heavily on data-driven methods to shape their marketing plans. One such method, which has been in use for decades, is A/B testing. Practices change as new digital channels and tools emerge, yet A/B testing remains one of the core pillars marketers rely on when launching ad campaigns, because it helps increase conversions by identifying the best-performing variant. But as with every long-standing marketing strategy, this technique has several downsides. In this blog, we explore some of the pitfalls of traditional A/B testing and introduce game-changing alternatives that can potentially cut A/B testing costs and time in half while still helping marketers make data-driven decisions.

What is A/B Testing?

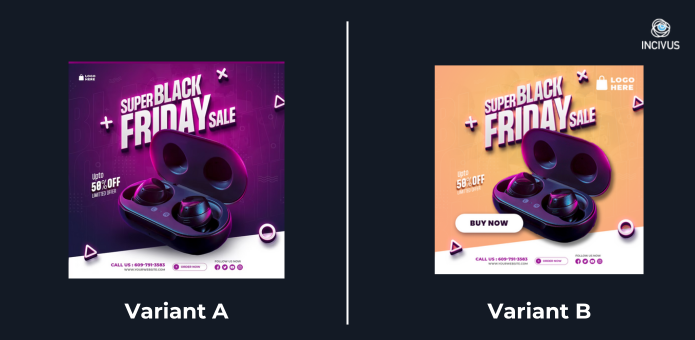

A/B testing, also known as split testing, is a method in which the performance of a control variant A is compared with that of another variant B. The comparison can cover content, landing pages, emailers, headlines, or digital ads. The audience is split into two or more groups, and the variant showing higher engagement is taken forward.
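To make the comparison concrete, here is a minimal sketch of how the two variants might be compared statistically. It assumes engagement is measured as a conversion rate and uses a standard two-proportion z-test; the function name and the campaign numbers are hypothetical and only illustrate the idea.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b are conversion counts, n_a / n_b are audience sizes.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical numbers: control A vs. variant B, 10,000 impressions each.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the gap between A and B is unlikely to be down to chance alone, which is usually the bar a variant has to clear before it is declared the winner.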

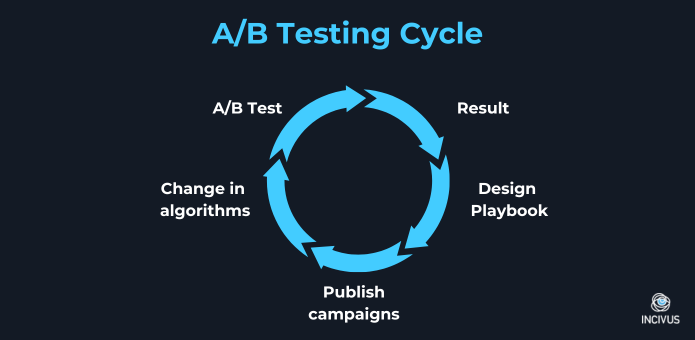

In this article, we explore how marketers leverage A/B testing in digital advertising. Marketers often use A/B testing to compare headlines, CTAs, images with various elements, colors, ad copy, fonts, logo placement or even the music in their digital ad campaigns. The process is tedious and time-consuming. The results of an A/B test come from one particular campaign or ad, yet brands often use them to craft a design playbook for future campaigns. The downside is that channel algorithms are ever-changing, which forces brands to run more tests before they can settle on a new playbook.

Brands usually set aside 10-20% of their advertising budget for A/B tests. This share can grow as the number of A/B tests increases.

In the last 5 years, many marketers have moved from A/B testing toward multivariate testing. Multivariate testing, or MVT, is when separate elements or combinations of elements are tested against the original version of the ad, essentially making it A, B, C, D or more testing. The audience can be split into thirds, quarters, sixths and so on. This too becomes a costly & confusing process, which can demand even more tests & more parameters before a conclusion can be drawn.
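A quick sketch shows why multivariate testing gets expensive so fast. It assumes a full-factorial test in which every combination of elements becomes its own variant and the audience is split equally; the element names and options below are made up for illustration.

```python
from itertools import product

# Hypothetical ad elements and the options being tested for each.
elements = {
    "headline": ["Headline 1", "Headline 2"],
    "cta": ["Shop now", "Learn more"],
    "image": ["Lifestyle photo", "Product photo", "Illustration"],
}

# Every combination of options becomes one variant of the ad.
variants = [dict(zip(elements, combo)) for combo in product(*elements.values())]

# With an equal split, each variant sees only a fraction of the audience.
audience_share = 1 / len(variants)

print(f"{len(variants)} variants, each shown to {audience_share:.1%} of the audience")
```

Two headlines, two CTAs and three images already produce 12 variants, so each one gets a much smaller slice of the audience and needs far longer to gather enough data.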

Understanding A/B testing’s limitations

According to AppSumo founder Noah Kagan, “Only 1 out of 8 A/B tests have driven significant change”. Yet marketers still rely on this traditional approach in their efforts to engage audiences. While there is no doubt that the method has helped marketers gain insights when testing their campaigns, it is not free from pitfalls. Below are some of the most common ones.

1. The Big ‘Why’

Marketers often struggle to understand why a specific variant resonated more with the audience: was it the visual, the text, or a completely different element that led to its success?

2. Parameters, parameters, parameters

Over time, the abundance of variables to test makes the A/B testing process extremely time-consuming. Marketers can lose sight of their initial control parameters and risk launching content that lacks data-backed insights. Enthusiasm for A/B testing can also lead to an overemphasis on running tests, which paralyses marketing teams and curbs their creativity.

3. Two words: False Positives

With numerous variables at play, A/B testing may produce results that appear statistically significant but are merely chance occurrences. Acting on such coincidences can lead to ill-advised decisions and ineffective marketing strategies.
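The arithmetic behind this is worth a quick look. The sketch below assumes the tests are independent and each uses the common 5% significance threshold, and it shows how the chance of at least one false positive grows with the number of tests. The Bonferroni correction included here is one standard safeguard, not the only one, and the function names are our own.

```python
def family_wise_error_rate(num_tests, alpha=0.05):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** num_tests


def bonferroni_alpha(num_tests, alpha=0.05):
    """Stricter per-test threshold that keeps the overall error rate near alpha."""
    return alpha / num_tests


for k in (1, 5, 10, 20):
    print(
        f"{k:>2} tests: {family_wise_error_rate(k):.0%} chance of a false positive, "
        f"Bonferroni threshold {bonferroni_alpha(k):.4f}"
    )
```

With ten variables tested this way, the odds of at least one misleading "winner" are roughly 40%, which is why a single significant result among many tests deserves a second look.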

4. The Obvious Pitfall

A/B testing is time-consuming. Running tests, and then further iterations to reach a conclusion, takes time and also increases expenditure. In the game of A/B testing, patience plays a vital role in reaching sound outcomes.
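To get a feel for how much patience is needed, here is a rough estimate of the audience size a single test requires. It uses the standard normal-approximation formula for comparing two conversion rates, with a 5% significance level and 80% power as assumed defaults; the conversion rates and daily impressions are hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate audience size needed per variant to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical campaign: 2% baseline conversion, hoping to detect a lift to 2.5%.
n_per_variant = sample_size_per_variant(baseline_rate=0.02, expected_rate=0.025)

# Assumed daily impressions available, shared across both variants.
daily_impressions = 5_000
days_needed = ceil(2 * n_per_variant / daily_impressions)

print(f"{n_per_variant} impressions per variant, about {days_needed} days of traffic")
```

The smaller the lift you want to detect, the larger the audience each variant needs, and every extra variant or iteration repeats that cost.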

5. Inability to predict future trends

Traditional A/B testing focuses on the present moment and the immediate response of the target audience. This approach may not accurately predict future trends or evolving user behavior, limiting its long-term impact.

Can these pitfalls be easily avoided?

A/B testing is the norm for most marketing teams, and while it has its benefits, its pitfalls cannot be ignored. With advancements in AI and its use in marketing, Incivus has developed an AI-powered creative effectiveness platform that allows marketers to:

1. Save time

Incivus cuts A/B testing time & costs in half! Harness the power of 10 million+ data points that deliver accurate results. Test ad creatives & their iterations within minutes.

2. Make efficient decisions

By eliminating human error, fatigue and bias and allowing AI to support data-driven decisions, we change how we address the big ‘why’. The creative variable-level reports generated by Incivus help marketers understand which variable is driving the success or failure of a creative and what can be done to improve it.

3. Empower marketing teams

By reducing the amount of statistical analysis required, Incivus frees marketing teams from excessive data work. Marketers can stay focused on ideation, strategic planning, and creating effective creatives.

Send us an email at marketing@incivus.ai to start your free demo.